The case for using data analytics is clear. Overall, businesses that use data analytics report achieving 8% increases in profit, 10% reductions in operating costs, and average annual growth rates of 30%. Companies that succeed in their use of data analytics report margin improvements of 500 basis points or more in 12 months, but only one in six companies succeeds with deployment.

Across industries, profit margins are highest for companies that have adopted artificial intelligence and use it strategically (Exhibit 1). The advantage is especially striking in financial services, where strategic users of AI average profit margins that exceed 10% (versus negative profit margins for other companies), and in health care, with profit margins above 15% for strategic users (versus losses for non-adopters of AI).

Exhibit 1

Companies with a strategy for leveraging data analytics are more profitable than others

Company leaders, however, see vast room to improve their ability to create value from data analytics. In a 2022 LNS Research survey on “analytics that matter,” more than half of the industrial companies surveyed (58%) said they were piloting or had implemented an analytics program. But among those companies, only 17% said they were “seeing dramatic business impacts” from their analytics program. Based on our experience with industrial companies, we see several reasons why companies in this sector are struggling to make use of analytics, and several places where changes would make a measurable difference in performance.

Where Industrial Companies Fall Short

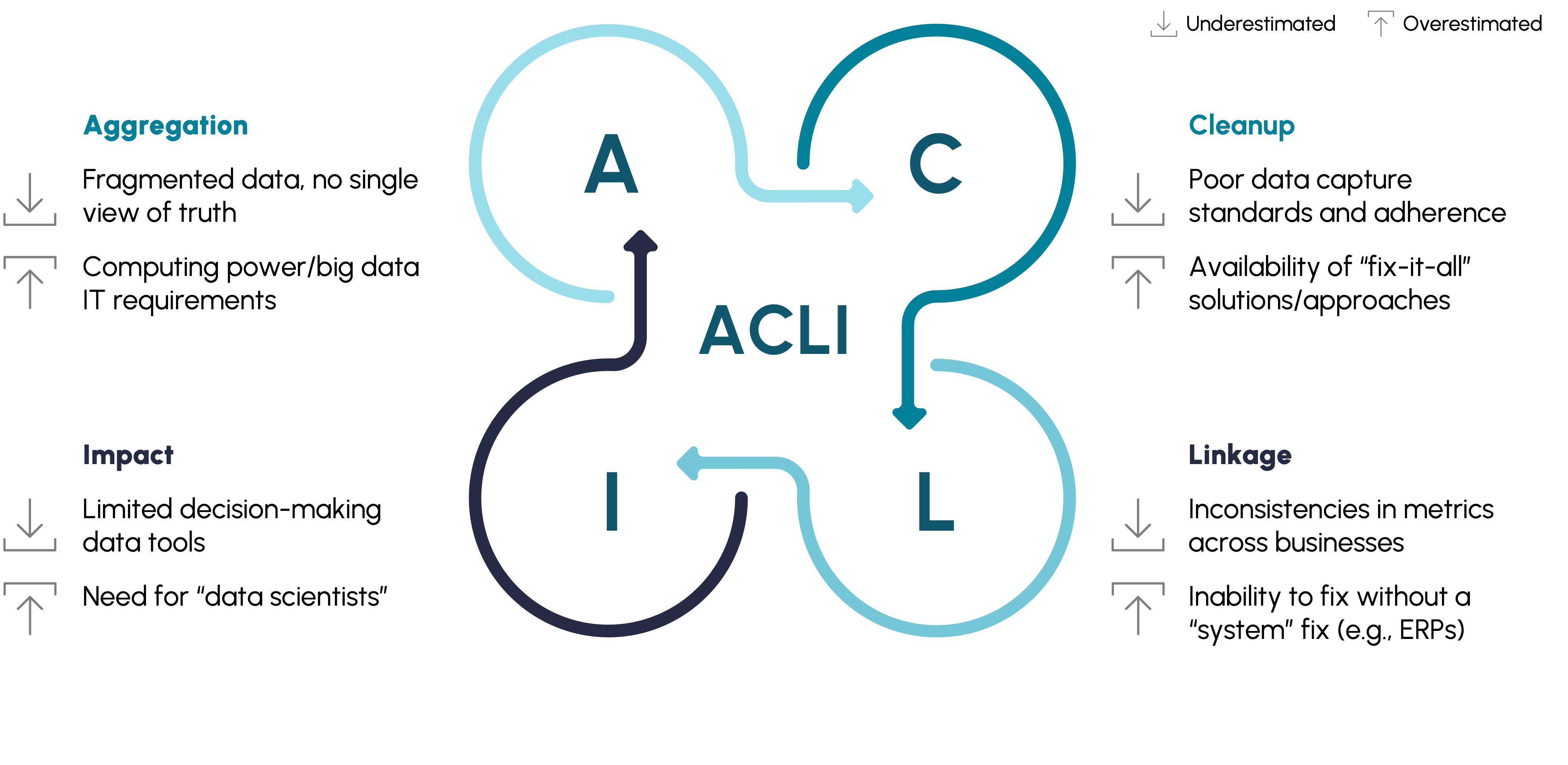

Companies, especially industrials, tend to run into problems with analytics in four key areas: data aggregation, data cleanup, data linkage, and data impact (Exhibit 2). In each area, they underestimate some of their needs and overestimate other needs or the capabilities required to meet them.

Exhibit 2

Most analytics endeavors, particularly in industrials, fall short in four areas

- Data aggregation suffers when companies underestimate their data collection needs and overestimate their need for computational resources. They do not realize that their data are fragmented, which limits their ability to put together a single view of “truth.” Better data aggregation for even a single use case can create significant value. At the same time, companies overestimate the computing infrastructure they need to meet the requirements for big data. Spending on sophisticated IT systems does not address problems with the data itself.

- Data cleanup tends to underperform because standards are lax and companies rely too much on a handful of solutions that claim to take care of all cleanup issues. On the underestimation side, standards for data capture are poor, and even these are often not followed. On the overestimation side, companies assume that “fix it all” solutions and approaches are readily available.

- Data linkage falls short because metrics are inadequate or poorly defined. Companies underestimate the impact of inconsistent metrics across businesses, which is not surprising, since it is difficult to find the right linkages between P&L performance and the KPIs that businesses typically track. For example, in pricing, not all businesses track discounting leakages by SKU or track poor margin realization due to exceptional order noncompliance. Or if they do track these, they don’t establish a connection to P&L margin performance. Too often, companies believe that the answer is one big system fix, such as a sophisticated ERP system. They underestimate the need for ordinary grunt work to come up with a holistic set of metrics and establish linkages to each P&L line item.

- Data impact is limited when companies underestimate their need for decision-making tools and overestimate their need for specialized data scientists. Not all companies invest the time and effort in developing real-time decision-making tools, which can be set up in a widely available tool such as Excel. For example, a pricing tool could price a SKU for a customer based on historical pricing and margin data at the SKU level and changes to raw material costs. One reason why companies shy away from developing these tools is the belief that they need highly trained data scientists to do so. In reality, what they need is the time and effort to gather and clean up the right historical data, plus collaboration across functions to make sure that the data are accurate and updated in real time.
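The pricing tool mentioned in the last bullet can be illustrated with a minimal sketch. It assumes one simple pricing rule, namely keeping the historical margin percentage constant when raw material costs change; the rule, the `SkuHistory` fields, and the `quote_price` function are all hypothetical and are not taken from any specific company's tool. The same arithmetic fits in a few spreadsheet cells.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SkuHistory:
    """Historical figures for one SKU and customer (illustrative fields)."""
    avg_price: float        # average realized price per unit
    avg_margin_pct: float   # average realized margin as a fraction of price
    material_share: float   # fraction of unit cost that is raw material


def quote_price(history: SkuHistory, material_cost_change: float) -> float:
    """Quote a price that preserves the historical margin percentage.

    material_cost_change is a fraction, e.g. 0.10 for a 10% increase.
    This margin-preserving rule is an assumption made for illustration.
    """
    # Back out the historical unit cost from price and margin.
    unit_cost = history.avg_price * (1 - history.avg_margin_pct)
    # Only the raw material portion of the cost moves.
    material_cost = unit_cost * history.material_share
    new_unit_cost = unit_cost + material_cost * material_cost_change
    # Re-price so that margin as a share of price stays the same.
    return round(new_unit_cost / (1 - history.avg_margin_pct), 2)


history = SkuHistory(avg_price=100.0, avg_margin_pct=0.25, material_share=0.40)
new_price = quote_price(history, material_cost_change=0.10)
print(new_price)
```

With these made-up inputs, a 10% rise in raw material costs moves the quote from 100 to about 104. The hard part in practice is not the formula but keeping the historical price, margin, and cost inputs clean and current.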

When companies embark on a digital, analytics, or data transformation effort, they seldom frame the problem in terms of the four areas above: data aggregation, cleanup, linkage, and impact. More often, they assume that the journey involves making massive system upgrades, hiring highly skilled data scientists, and coming up with fancy new use cases (typically disconnected from day-to-day performance). Such a response falls short of addressing these areas appropriately.

Getting Analytics Right

Companies driving value from data analytics leverage a playbook to address each of these areas. Improvements to data aggregation do not require an overhaul of the IT function. Changes to ERP systems are likely to be modest. Rather than a full system overhaul, companies can establish an application-based architecture on top of their legacy systems (Exhibit 3). The legacy system would include customer transaction, head count, and spending data for each facility, as well as data for financial systems. A data lake would aggregate data for measuring revenue, materials inventory, head count, and non-labor spending. This would be used to inform processes in the application layer, which is designed to deliver actionable data for diagnostics, continuous operations, and real-time decision tools.

Exhibit 3

Data aggregation can focus on application-based architecture, not a full system overhaul
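As a rough illustration of the aggregation step, the sketch below merges per-facility records from separate legacy sources into a single view that an application layer could read. The source names, field names, and the `aggregate_by_facility` function are hypothetical, and a real data lake would add storage, validation, and refresh logic that is omitted here.

```python
from collections import defaultdict


def aggregate_by_facility(*sources):
    """Merge records from several sources into one view keyed by facility.

    Each source is a list of dicts that all carry a "facility" key.
    If two sources supply the same field, the later source wins.
    """
    view = defaultdict(dict)
    for records in sources:
        for record in records:
            facility = record["facility"]
            for field, value in record.items():
                if field != "facility":
                    view[facility][field] = value
    return dict(view)


# Illustrative extracts from three legacy systems (made-up numbers).
transactions = [{"facility": "plant_a", "revenue": 1200.0},
                {"facility": "plant_b", "revenue": 800.0}]
hr_system = [{"facility": "plant_a", "head_count": 45},
             {"facility": "plant_b", "head_count": 30}]
spend_system = [{"facility": "plant_a", "non_labor_spend": 300.0}]

single_view = aggregate_by_facility(transactions, hr_system, spend_system)
print(single_view["plant_a"])
```

Note that `plant_b` ends up without a `non_labor_spend` field. Gaps like this are exactly the fragmentation that a single view makes visible.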

The key to improving data cleanup is to start with the realization that the company need not be “held hostage” by bad data. There are no magic fixes for cleaning up the customer master; it requires rigorous work. However, it can be done. The effort is more likely to have impact if the company prioritizes high-value areas, for example, cleaning up the master list of customers to analyze discounting and margins by channel at the customer level. The company should also address data architecture (i.e., data collection, storage, transformation, and consumption) for the near and long term.

With regard to data linkage, companies need to track not just the changes in financial measures that result from improvement initiatives but also the absolute impact on the P&L, net of any leakages that might be occurring. Most companies assume that expected gains from their improvement initiatives will just show up on their P&L. A better approach is a holistic view of P&L line items, in which the company tracks all possible metrics and creates transparency on leakages that might be occurring elsewhere.
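The idea of tracking impact net of leakages can be sketched as simple arithmetic per P&L line item. The line-item names, the figures, and the `net_pl_impact` function below are illustrative assumptions, not a prescribed metric set.

```python
def net_pl_impact(gross_gains: dict[str, float],
                  leakages: dict[str, float]) -> dict[str, float]:
    """Return net impact per P&L line item: initiative gains minus leakages.

    Line items that appear only as leakages show up with a negative value,
    which is what makes leakage "elsewhere" visible.
    """
    line_items = sorted(set(gross_gains) | set(leakages))
    return {
        item: gross_gains.get(item, 0.0) - leakages.get(item, 0.0)
        for item in line_items
    }


# Made-up figures, in thousands of dollars.
gross_gains = {"revenue": 500.0, "materials": 200.0}
leakages = {"revenue": 150.0, "freight": 50.0}

net = net_pl_impact(gross_gains, leakages)
total_net = sum(net.values())
print(net)
print(total_net)
```

In this example, the initiatives claim 700 in gross gains, but only 500 reaches the P&L once discounting leakage on revenue and an offsetting rise in freight are counted.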

To improve data impact, decision makers need to clarify what “good” looks like. That is, they need to agree on how data and analysis will apply to categories of decisions (e.g., what price to quote for a certain SKU to a customer, what discounts to offer, and when to pay a certain supplier) and on what improvements in decisions they can pursue. Then the company needs to invest time and effort in developing real-time decision-making tools, such as a pricing tool. Instead of looking to hire data scientists to develop such tools, companies should look to reinforce capacity by adopting a build, operate, transfer (BOT) model with third-party partners.

Data analytics can deliver significant value, but this is not guaranteed. Companies that succeed report margin improvements of 500 basis points or more in 12 months, but only one in six companies succeeds with deployment. To get the use of data analytics right, industrial companies need to know the high-value areas where data analytics can add real value to the business; often, these include customer experience, sales, pricing, and labor. To enjoy the benefits as soon as possible, companies can work now on the foundation: start fixing data infrastructure in the near term and establish data governance for the medium term. Finally, companies should close the loop to impact by measuring results transparently against the plan’s definition of what good looks like, tracking the net impact of improvement initiatives on the P&L, and ensuring that the company takes action as needed to close the gap.